You may have noticed that my blog hasn’t been updated nearly as much this semester as it was last year and the year before. One of the reasons for this has been the Languages and Compilers assessed coursework, which has taken up quite a bit of time.

The aim of the coursework was to build a compiler for a completely made-up language called SPL, which stands for Simple Programming Language. A compiler is a bit of software which converts a “high level” human-readable language into a “low level” language a computer can execute.

Formalizing the Grammar of the Language

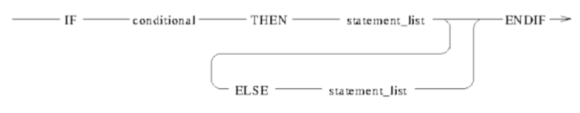

The first thing we had to do was formally specify the grammar of the language. The grammar of a language specifies what is, and isn’t, valid in that language. We did this by reading some syntax diagrams provided to us by our lecturer, such as the one above, and then writing them out in Backus-Naur Form (BNF). The diagram above is written in Backus-Naur Form as:

<if_statement> ::= IF <conditional> THEN <statement_list> ENDIF |

IF <conditional> THEN <statement_list> ELSE <statement_list> ENDIF

Items in <brackets> are other rules, so there would be another rule which describes the syntax of “conditional” and “statement list”. Using multiple rules together allows us to build up a complete syntax for the language.

The Tokenizer

Once we had developed a complete BNF grammar for the language we could move on to building the compiler itself. This was especially interesting for me because my final year project uses a lot of the same concepts.

The first stage of building the compiler was building a tokenizer. A tokenizer, as I’ve spoken about before, is a system which recognizes certain keywords, or patterns, and gives them a label which can then be used later on in the compilation. For example the C# code:

public static void main()

{

int a = 10 / 2;

}

Consists of the following tokens:

- Public Keyword

- Static Keyword

- Void Keyword

- Identifier Pattern main

- Open Bracket (sometimes referred to as BRA)

- Close Bracket (sometimes referred to as KET)

- Open Curly Bracket

- Int Keyword

- Identifier Pattern a

- Symbol =

- Integer Pattern

- Symbol /

- Integer Pattern

- Semi Colon

- Close Curly Bracket

We used flex, an open source version of the Unix program lex, to build our tokenizer (in my FYP I’m building a tokenizer from scratch). In our flex file we recognized tokens using regular expressions. Matching something like a keyword is rather simple, because you just look for that literal string. Looking for an integer is a tad more interesting. Below you can see a few examples:

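/* Primitives */

digit [0-9]

number {digit}+

/* End: Primitives */

%%

 /* Public keyword (comments in the rules section must be indented) */

public TOKEN(public);

 /* Integers (i.e. whole numbers) */

{number} TOKEN(integer);

 /* Reals (i.e. numbers with a decimal point); the dot is escaped because a bare . matches any character */

{number}\.{number} TOKEN(float);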

On the left hand side of each rule is the pattern that has to be matched. For the word public it is the literal string public, for an integer it is a {number}, and for a real it is a {number}, a full stop, and then another {number}. {number} has been declared previously to be one or more digits, and a digit has been declared before that to be any character from 0 to 9.

The tokenizer takes a .spl file, which contains SPL code written by a programmer, and spits out an array of tokens which we can then use in the next stage.
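To make the idea a bit more concrete, here is a minimal sketch of the same approach in Python rather than flex. It is not the coursework tokenizer: the token names and the tiny set of keywords and symbols are assumptions chosen to cover the C# snippet from earlier. One difference worth noting is that flex picks the longest match, whereas Python’s `re` alternation takes the first alternative that matches, so the order of the patterns matters here.

```python
import re

# Illustrative token names (assumptions, not the names used in the coursework).
# Order matters: Python tries alternatives left to right, unlike flex's longest match.
TOKEN_SPEC = [
    ("FLOAT",      r"[0-9]+\.[0-9]+"),                  # {number}\.{number}
    ("INTEGER",    r"[0-9]+"),                          # {number}
    ("KEYWORD",    r"\b(?:public|static|void|int)\b"),  # literal strings
    ("IDENTIFIER", r"[A-Za-z_][A-Za-z0-9_]*"),
    ("SYMBOL",     r"[=/(){};]"),
    ("SKIP",       r"\s+"),                             # whitespace is discarded
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source):
    """Return a list of (label, text) pairs for the given source string."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        if match.lastgroup != "SKIP":
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens


if __name__ == "__main__":
    for label, text in tokenize("public static void main()\n{\n    int a = 10 / 2;\n}"):
        print(label, text)
```

Running it on the C# snippet gives one labelled token per item in the list above, from the public keyword through to the closing curly bracket.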

The Syntax Parser

Once we had finished building our tokenizer we could go on to building a syntax parser. The job of the syntax parser is to ensure that the tokens provided by the tokenizer are in an order which makes sense. To produce the syntax parser we used a free program called Bison, which is based on the Unix program YACC.

This is where we go back to the Backus-Naur Form we made previously. Using that, with some alteration, we produced a .y file which, once passed through Bison, could identify correct grammars in the list of tokens passed to it — and tell the programmer there was an issue with their code if anything was detected as incorrect.

Correct grammars were added to a parse tree, in hierarchical order. As we saw earlier, an IF statement contains a conditional, so that became one of the branch nodes of the tree which represented an IF statement.
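As a rough illustration of how the IF rule turns into a tree, here is a small hand-written recursive-descent parser in Python. This is a different technique from what Bison does (Bison generates a table-driven LALR(1) parser from the .y file), and it is heavily simplified: the `Parser` class, the tuple-shaped nodes, and the idea that a conditional or a statement is a single placeholder token are all assumptions made for the sketch.

```python
KEYWORDS = {"IF", "THEN", "ELSE", "ENDIF"}


class Parser:
    """Recognizes <if_statement> and builds a nested-tuple parse tree."""

    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def expect(self, keyword):
        if self.peek() != keyword:
            raise SyntaxError(f"expected {keyword} but found {self.peek()!r}")
        self.pos += 1

    def parse_if_statement(self):
        # IF <conditional> THEN <statement_list> [ELSE <statement_list>] ENDIF
        self.expect("IF")
        conditional = self.parse_conditional()
        self.expect("THEN")
        then_branch = self.parse_statement_list()
        else_branch = None
        if self.peek() == "ELSE":
            self.pos += 1
            else_branch = self.parse_statement_list()
        self.expect("ENDIF")
        return ("if_statement", conditional, then_branch, else_branch)

    def parse_conditional(self):
        # Simplification: a conditional is one non-keyword placeholder token.
        token = self.peek()
        if token is None or token in KEYWORDS:
            raise SyntaxError(f"expected a conditional but found {token!r}")
        self.pos += 1
        return ("conditional", token)

    def parse_statement_list(self):
        # Simplification: a statement is a nested IF or one placeholder token.
        statements = []
        while True:
            token = self.peek()
            if token == "IF":
                statements.append(self.parse_if_statement())
            elif token is None or token in KEYWORDS:
                break
            else:
                statements.append(("statement", token))
                self.pos += 1
        if not statements:
            raise SyntaxError("expected at least one statement")
        return ("statement_list", statements)


if __name__ == "__main__":
    print(Parser(["IF", "a<b", "THEN", "s1", "ELSE", "s2", "ENDIF"]).parse_if_statement())
```

The conditional and the statement lists end up as children of the if_statement node, and a token sequence with a missing ENDIF is rejected with a SyntaxError instead of producing a tree.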

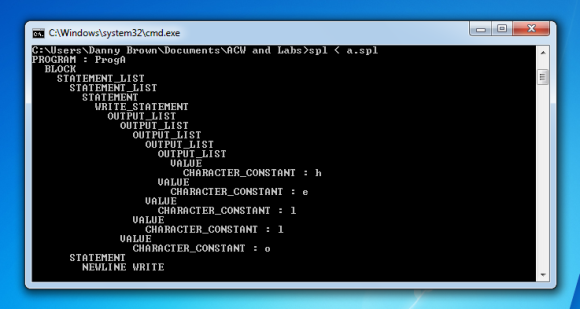

At this point we could print out the parse tree. My software could print it both to the console and to an interactive animated viewer. Below you can see both:

Code Generation

Once we have determined that the input file from the programmer is syntactically correct we need to generate executable code. For this coursework, instead of generating an executable directly, we generated ANSI C code and passed that to the GNU C Compiler. We did this for a few reasons:

- Generating machine code directly wouldn’t be as portable

- Generating machine code would take a lot longer!

- Learning ANSI C was really useful, as it’s one of the most widely used programming languages in the world

Generating code was a simple case of walking the parse tree and generating code for each node… for example, if you reach an IF THEN ELSE node you could simply output the following:

if(/*Generate code for the CONDITIONAL here*/)

{

/*Generate code for the IF statement list here*/

}

else

{

/*Generate code for the ELSE statement list here*/

}

You just need to make sure that you generate the code for the conditional and the statement lists in the correct sequence. Certain constructs throw up a few problems, such as FOR loops (which way should the iteration go?), but the concept of code generation from a tree is in itself simple.
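The tree walk described above can be sketched in a few lines of Python. This is only an illustration under assumptions, not the coursework generator: the node shapes (`("if", conditional, then_list, else_list)` and `("assign", name, expression)`) and the `generate` function are made up for the example, and conditionals and expressions are carried around as ready-made C text.

```python
def generate(node, indent=0):
    """Return a list of C source lines for a (hypothetical) tuple-shaped tree node."""
    pad = "    " * indent
    kind = node[0]
    if kind == "if":
        _, conditional, then_list, else_list = node
        lines = [f"{pad}if ({conditional})", f"{pad}{{"]
        for statement in then_list:              # code for the IF statement list
            lines.extend(generate(statement, indent + 1))
        lines.append(f"{pad}}}")
        if else_list is not None:                # plain IF has no ELSE branch
            lines += [f"{pad}else", f"{pad}{{"]
            for statement in else_list:          # code for the ELSE statement list
                lines.extend(generate(statement, indent + 1))
            lines.append(f"{pad}}}")
        return lines
    if kind == "assign":
        _, name, expression = node
        return [f"{pad}{name} = {expression};"]
    raise ValueError(f"unknown node kind: {kind}")


if __name__ == "__main__":
    tree = ("if", "a < b", [("assign", "a", "1")], [("assign", "a", "2")])
    print("\n".join(generate(tree)))
```

Each node type only needs to know how to emit its own text and then recurse into its children, which is why nested IF statements come out correctly indented without any special handling.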

Optimization

The final part of the coursework was to add some optimization to the compiler. Some optimizations you could implement included:

- Don’t compile the code if it doesn’t contain any input or output (if a program has no input or output, it looks the same as having no program at all!)

- If a programmer increments a variable by 1, generate the ++ operator rather than + 1

- Constant folding: if a variable is assigned the result of an expression made up only of constants, e.g. a = 4/2, then generate a = 2, because the result will always be the same

I chose to implement both the increment optimization and the no input or output optimization. I was going to add constant folding too, but didn’t think I had enough time.
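For the curious, here is a rough Python sketch of what the increment optimization and constant folding could look like when applied to an expression tree. It is not what I submitted. The tuple-shaped nodes (`("num", 4)`, `("var", "a")`, `("binop", op, left, right)`) and the function names are assumptions for the example, only integer constants are handled, and C integer overflow is ignored.

```python
def fold(expr):
    """Constant folding: replace constant-only integer sub-expressions with their value."""
    if expr[0] != "binop":
        return expr
    _, op, left, right = expr
    left, right = fold(left), fold(right)
    if left[0] == "num" and right[0] == "num":
        a, b = left[1], right[1]
        if op == "+":
            return ("num", a + b)
        if op == "-":
            return ("num", a - b)
        if op == "*":
            return ("num", a * b)
        if op == "/" and b != 0:
            # C integer division truncates toward zero; Python's // floors.
            quotient = abs(a) // abs(b)
            return ("num", -quotient if (a < 0) != (b < 0) else quotient)
    # Not foldable (a variable is involved, or division by zero): keep the node.
    return ("binop", op, left, right)


def render(expr):
    """Turn an expression tree back into C source text."""
    if expr[0] == "num":
        return str(expr[1])
    if expr[0] == "var":
        return expr[1]
    _, op, left, right = expr
    return f"({render(left)} {op} {render(right)})"


def emit_assignment(name, expr):
    """Generate C for `name = expr`, using ++ when the variable is incremented by 1."""
    expr = fold(expr)
    if expr == ("binop", "+", ("var", name), ("num", 1)):
        return f"{name}++;"
    return f"{name} = {render(expr)};"


if __name__ == "__main__":
    print(emit_assignment("a", ("binop", "/", ("num", 4), ("num", 2))))
    print(emit_assignment("i", ("binop", "+", ("var", "i"), ("num", 1))))
```

Folding runs bottom-up, so a constant sub-expression buried inside a larger one gets collapsed even when the expression as a whole still depends on a variable.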

Conclusion

Today I got my grade for the coursework and achieved 80%, which is a strong first class. I’m really happy with this. 🙂

I would recommend that any second year thinking about what modules he or she should take next year seriously consider Languages and Compilers. It is by far the best module I have taken. It combines knowledge from all your previous modules and really cements that information in your head.

I’d like to thank both Dr. Martin Walker and Eur Ing Brian Tompsett for setting such an interesting coursework, and providing me with the help that they did along the way.

If I’m allowed, I might upload my compiler and some documentation for SPL for people to have a play with.

Danny